An institutionally supported version of gen AI is now available to staff and students. What does this mean for our teaching and learning?

By Professor Alex Steel | Published 21 May 2024

As an early adopter, UNSW supports and encourages the ethical and responsible use of AI in research, learning, teaching, administration, and thought leadership. In alignment with this commitment, Microsoft’s Copilot with Commercial Data Protection (formerly Microsoft Bing Chat) has been rolled out to UNSW staff in 2024. On Monday 20 May (O-week of Term 2), the tool was switched on for our students, and official communications regarding its availability will begin from Week 1.

Microsoft Copilot is a generative AI tool that can create text and images from text or voice prompts and can summarise PDF documents. It can run live internet searches when constructing responses and provides links to the sites it used as references. It is currently based on the GPT-4 model.

What makes Microsoft Copilot with Commercial Data Protection different from other tools?

When students and staff log in through their work account (using UNSW zID), their data is encrypted and is not seen by humans. Prompts and answers are discarded at the end of a session. Data is not used to train AI models. These features provide added levels of privacy protection.

For more information on Copilot at UNSW, see the IT site here.

So, what’s the impact on our teaching – what should we as educators do?

Let’s talk about AI with our students.

Many of our students have already become experienced users of gen AI tools, but others remain wary. Our students are also looking to us for advice on its responsible use at UNSW.

Recently, the university approved a set of UNSW principles: “Responsible and Ethical Use of AI at UNSW”. The inclusion of the words ‘responsible’ and ‘ethical’ in their name is deliberate, reiterating our overarching UNSW approach.

The principles provide a wonderful way to begin a conversation with students about the responsible use of AI, and UNSW’s commitment to ethical innovation. We encourage colleagues and students to refer to these guidelines, as they underpin all other related advice and activity.

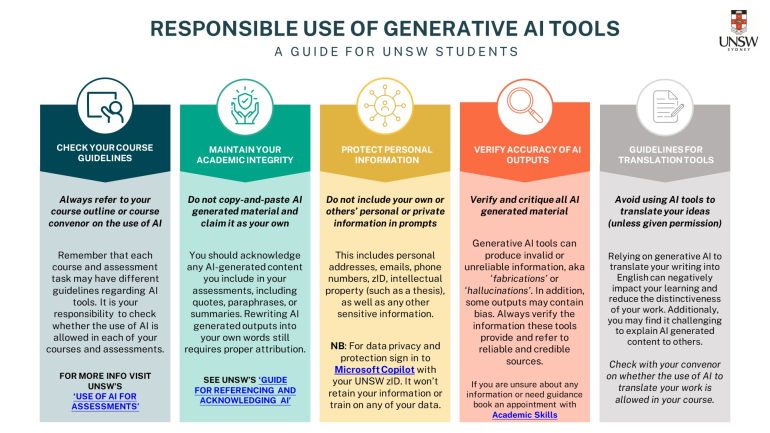

To help bring those principles down to the practical level of student learning, UNSW’s Student Learning team has produced a helpful one-page guide.

We recommend you download this slide, include it in your Moodle course materials and show it to your students at the start of term:

(Below is only a screenshot of the one-page guide. Please download it from the link above)

Both the principles and the guide are available on the Current Students site here.

The UNSW Code of Values and Conduct has also been recently released and includes reference to responsible and attributed use of AI (3.2).

Generative AI in Assessment

UNSW does not have a position banning the use of generative AI in assessment and encourages its use where appropriate (particularly now that students can access our enterprise Copilot platform).

This means that if Course Convenors consider it appropriate to restrict the use of AI in assessment, it is necessary to precisely set out the nature of those restrictions.

To assist with this, UNSW has published a set of template statements to use in Course Outlines and assessment instructions. Please note that it is always helpful to elaborate on those statements to provide more detail for your specific assessment context.

The other very useful resource we can point our students to is ‘UNSW’s AI referencing guide’, also captured on the one-page guide slide above.

More information on using AI in teaching and assessment, including ideas and case studies from UNSW colleagues, can be accessed here. The PVC Education Portfolio team, in collaboration with the new Education Focussed AI Community of Practice, is currently migrating these materials from SharePoint to Teaching Gateway for easier access. More support materials and professional development opportunities are on their way, both from the PVCE teams and from Faculty teams.

We can encourage students to experiment with Copilot and compare it with other tools (tools such as Perplexity and ChatGPT no longer require accounts, which enhances anonymity).

Part of their learning, and ours, is to discover when generative AI is a helpful tool, and when it misleads or is inefficient. There are important conversations to be had about when it can help learning, and when it may impede it. There may be simple ways to incorporate these moments into classes, in much the same way that live internet searching has found its way into teaching.