By Diana Saragi Turnip, A/Prof. Priya Khanna Pathak and Prof. Gary Velan

Authors represent UNSW's Faculty of Medicine and Health, Nexus Program and Scientia Education Academy

Published 15 October 2024

Comparing apples and oranges: Transforming assessment at UNSW with Programmatic Assessment for Learning

One of the wicked problems with which most disciplines in higher education have grappled is ensuring that assessment decisions are meaningful and relevant, while enabling students to track their learning progress. However, the contemporary practice of combining data from different assessment types and formats within a single unit is akin to the proverbial comparison of apples with oranges.

Each fruit has its unique flavours and nutritional benefits, but mixing them in a “fruit salad” would make it difficult to appreciate their individual characteristics. This analogy perfectly illustrates the challenge faced by traditional assessment methods in higher education, where combined performance in all learning outcomes above an arbitrary pass mark is sufficient to progress or graduate, despite the potential for graduates to have a substandard level of achievement in one or more learning outcomes. To address these issues in UNSW’s assessment system, as well as to mitigate excessive workload and assessment redundancy, UNSW is exploring a transformative approach known as Programmatic Assessment for Learning (PAL).

The need for PAL

Traditional assessment paradigms in higher education have faced criticism for their unfavourable impact on the student experience, as well as for causing excessive workloads for both students and staff. The rise of generative AI, which challenges the integrity of current assessment practice, and the need for meaningful, work-relevant educational experiences necessitate a shift towards a more holistic and integrated system of assessment. UNSW is committed to enhancing the student experience through exploring innovative assessment approaches, and PAL is a key part of this endeavour.

What is PAL?

Unlike traditional high-stakes exams, PAL focuses on continuous feedback and low-stakes assessments that contribute to high-stakes decisions when aggregated (van der Vleuten et al., 2014). It systematically combines data from various assessment tasks over time to build a comprehensive view of a student’s progress towards achieving program-level outcomes.

Emerging evidence shows that programmatic assessment offers several long-term benefits, including increased competency growth, improved receptivity towards feedback, improved validation of capabilities, and reduced assessment overload when assessment tasks are carefully chosen and triangulated (Baartman et al., 2022; Khanna et al., 2023; Roberts et al., 2022; Samuel et al., 2023). However, misalignment between learning (which is longitudinal and self-directed) and assessment (which is inherently judgemental) can lead to adverse student experiences and diminished transparency, trust and acceptability. In response, PAL aims to equip students with the ability to analyse their learning strengths and gaps, using collated assessment data to set specific learning goals and strategies (Schuwirth et al., 2017).

Core principles of PAL

Based on extensive consultations with the Programmatic Assessment working group at UNSW in 2024, our version of PAL is guided by six core principles:

- L – Learning and learners first

- E – Explicit and meaningful linkages and messaging

- A – Aggregation of data

- R – Reduction in workload

- N – No high-stakes decisions based on a single task

- S – Self-regulation of learning

How does PAL work?

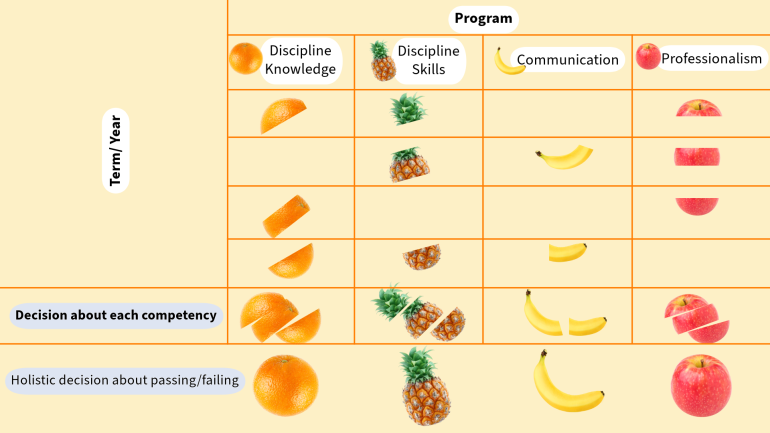

In conventional systems, different types of data (e.g., knowledge, skills, professionalism) are often combined or averaged together, leading to a single score or grade. This makes it difficult to get a clear picture of which strengths or gaps in capabilities the score reflects. PAL, in contrast, aggregates data as it relates to specific competencies or learning outcomes, ensuring that each type of data is used appropriately to make holistic decisions about student progress (van der Vleuten et al., 2014). For example, in the left panel of the accompanying figure, data regarding learning outcomes related to knowledge, skills, communication and professionalism (represented by oranges, pineapples, bananas and apples respectively) are aggregated within each module or unit of study. A student may progress from module to module despite unsatisfactory achievement in one or more of the learning outcomes, as progression is determined by aggregating data related to all learning outcomes in a “fruit salad”. In contrast, the right panel aggregates assessment data for each learning outcome over time, thereby enabling a clear view of students’ achievement of the required standards for each outcome. Progression or graduation decisions are then made based on satisfactory attainment of all outcomes.
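For readers who find a worked example helpful, the contrast between the two panels can be sketched in a few lines of Python. This is an illustration only, not a description of any UNSW system: the scores, the pass mark of 50, and the function names `fruit_salad_decision` and `per_outcome_decision` are all hypothetical, and a simple average stands in for what would in practice be a holistic judgement informed by rich feedback data.

```python
from statistics import mean

# Hypothetical data for one student. All values are invented for illustration.
PASS_MARK = 50  # assumed arbitrary pass mark

# Each record: (module, learning outcome, score out of 100)
records = [
    ("Module 1", "knowledge", 85),
    ("Module 1", "skills", 70),
    ("Module 1", "communication", 75),
    ("Module 1", "professionalism", 30),
    ("Module 2", "knowledge", 80),
    ("Module 2", "skills", 65),
    ("Module 2", "communication", 70),
    ("Module 2", "professionalism", 35),
]


def fruit_salad_decision(records, pass_mark=PASS_MARK):
    """Conventional rule: average all outcomes within each module.

    The student progresses if every module average reaches the pass mark.
    """
    by_module = {}
    for module, _outcome, score in records:
        by_module.setdefault(module, []).append(score)
    return all(mean(scores) >= pass_mark for scores in by_module.values())


def per_outcome_decision(records, pass_mark=PASS_MARK):
    """Per-outcome rule: aggregate each learning outcome across modules.

    The student progresses only if every outcome reaches the standard.
    Returns the decision and the list of outcomes not yet met.
    """
    by_outcome = {}
    for _module, outcome, score in records:
        by_outcome.setdefault(outcome, []).append(score)
    unmet = sorted(
        outcome for outcome, scores in by_outcome.items()
        if mean(scores) < pass_mark
    )
    return len(unmet) == 0, unmet


print(fruit_salad_decision(records))   # module averages are 65 and 62.5
print(per_outcome_decision(records))   # professionalism averages 32.5
```

With these invented numbers, the module-by-module average lets the student progress, while the per-outcome view flags professionalism as not yet at the required standard, which is the gap the "fruit salad" hides.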

Benefits of PAL

Gives a holistic view of student progress: By tracking learners’ progress across multiple competencies, PAL creates a detailed picture of their development, enhancing both learning and decision-making (Roberts et al., 2022; van der Vleuten et al., 2014).

Encourages active engagement: Continuous feedback motivates students to engage actively with their learning, reducing the stress associated with high-stakes exams (Lodge et al., 2023).

Reduces workload: PAL aims to streamline assessment tasks, potentially reducing the workload for both students and staff (Schut et al., 2021).

Encourages academic integrity: With low-stakes assessments, the pressure to cheat is minimised, fostering a more honest learning environment (Lodge et al., 2023).

Enhances feedback: Aggregated assessment data provides meaningful, actionable feedback for students.

Supports self-regulation: PAL encourages students to set and monitor their learning goals, fostering self-regulation.

Challenges and enablers

Implementing PAL requires a cultural shift and significant investment in resources and technology. Key enablers include revisiting program learning outcomes, building capacity in assessment and feedback literacy, and ensuring agility in assessment policies and procedures (Baartman et al., 2022; Heeneman et al., 2021).

The shift towards PAL at UNSW represents a significant step in enhancing student learning and preparedness for the professional world. By focusing on continuous feedback and holistic assessment, PAL aims to create a more meaningful and work-relevant educational experience.

For information about PAL implementation stages, along with UNSW examples, head to this new Teaching Gateway resource page.

Next steps for UNSW colleagues

What are your thoughts on the shift towards Programmatic Assessment for Learning? How do you think it will affect your students’ learning experience and your own teaching? Would you like to trial it in one of your UNSW courses or programs?

Read our PAL white paper to find out more.

Provide feedback, or express interest in piloting PAL by completing this form.

***

Diana Saragi Turnip and A/Prof. Priya Khanna Pathak are members of the UNSW Nexus Program. Prof. Gary Velan is a Fellow of the UNSW Scientia Education Academy.